The Motive

An update six years later, January 2025

This post was written a little more than six years ago. It has pretty much stood the test of time. Of course, the number of cores in a GPU is obsolete. The achievements of deep learning have been amazing, and we all use LLMs all the time. Platform-wise, other than price/performance improvements and many small advances, the big picture hasn't changed. We keep the post as it is, since it helps to show that what motivated us in 2018 still does.

And, with Jazz 1.25.1 around the corner, Jazz is already a practical way around some of these issues.

AI winter (maybe) coming

Does AI live in the perfect world that businesses and mainstream media are telling us about?

AI is living its most exciting moment in decades. All the necessary tools to explore, train and deploy deep learning models are free software. They combine highly optimized low level CPU and GPU software with highly expressive and productive scripting languages on top of it. This allows AI researchers to try new ideas and publish results every day.

Meanwhile, on the same planet ... The most important AI researchers (we name just three to avoid digressing) agree that we still need drastic changes before we can solve problems such as common sense reasoning and many more. Humans can solve these tasks even as kids.

Geoffrey Hinton: "'Science progresses one funeral at a time.' The future depends on some graduate student who is deeply suspicious of everything I have said."

Yoshua Bengio: "Engineers and companies will continue to tune getting slight progress. That's not going to be nearly enough. We need some fairly drastic changes in the way that we are considering learning."

François Chollet: "For all the progress made, it seems like almost all important questions in AI remain unanswered. Many have not even been properly asked yet."

The "Good Old Fashioned AI" argument is partial at best

Here is how it goes

We don't cite anyone as its author. The argument just got repeated to the point that it is common belief. It goes: "A long time ago, we as humans coded the solutions to the problems. That's GOFAI (Good Old Fashioned AI). We figured that was a dead end and switched to end-to-end optimization. Now, data makes the difference, and the machine creates the model using deep learning." Many even state that, today, major AI players can only be the data owners.

The least important thing is how deeply misconstrued this argument is, and how unfair it is to all the researchers and achievements AI produced for over sixty years (~1950-2013), even though some of these achievements were truly game changing. We all got used to taking them for granted. That's what AI has always been about: the next problem.

The important thing is that even in the fields in which DL (Deep Learning) produced major advances, as in computer Go, this view is partial to the point of not being true. AlphaGo, the program that won against Lee Sedol, the best player in the world, is a mix of Monte-Carlo Tree Search and DL. Without the DL, it is still stronger than almost the whole human population and as strong as the best professionals in 9x9. With only the DL and no search, it just sucks. It becomes a player that plays strong and elegant moves but does not understand why or when they should be played. It is like a person pretending to be a physicist by repeating random phrases taken from academic papers in physics. Being good at predicting the moves of a master is not the same as mastering the game.

Deep learning is great, but hard problems require more than just DL. You may be thinking: "I can do other things with current platforms easily." Well, easily, maybe, but not efficiently, which is our point.

(Not really) a digression on rectangles

We immediately recognize rectangular shapes as man made.

They can be good solutions to optimizing some problem.

Some have more aesthetic value than others.

And we do not just build objects as rectangles ...

... we abstract ideas as rectangles

- We build our data sets as rectangles. (like sheets and tables)

- Images and videos are rectangles. (in higher dimension)

- Neural networks are rectangles. (of units)

- Even words in natural language processing are rectangles!

Believe it or not, the latter is true. In the online version of the Cambridge Dictionary, the many definitions of the word "do" take 442 lines, and the word "think" takes 143. With just 4 you can define "pneumonoultramicroscopicsilicovolcanoconiosis". In deep learning, they all take the same space: one row from one rectangle.

And that, of course, is very good, because humans are very efficient at pushing rectangles into other rectangles.
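The "one row from one rectangle" point can be made concrete with a minimal sketch. The table width, the random values and the function name `build_embedding_table` are assumptions for illustration only; the line counts are the ones quoted above.

```python
import random

EMBEDDING_DIM = 8  # hypothetical width; real models use far more columns


def build_embedding_table(vocabulary, dim=EMBEDDING_DIM, seed=0):
    """Return one fixed-width row of floats per word (a 'rectangle')."""
    rng = random.Random(seed)
    return {word: [rng.uniform(-1.0, 1.0) for _ in range(dim)]
            for word in vocabulary}


# Dictionary line counts quoted in the text.
definition_lines = {
    "do": 442,
    "think": 143,
    "pneumonoultramicroscopicsilicovolcanoconiosis": 4,
}

table = build_embedding_table(definition_lines)
row_widths = {word: len(row) for word, row in table.items()}

for word, lines in definition_lines.items():
    # However long the dictionary entry is, the row width never changes.
    print(f"{word[:12]:<12} definition lines: {lines:>3}  row width: {row_widths[word]}")
```

Whatever the complexity of the word, the representation has the same shape, which is exactly what makes it easy to push into another rectangle.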

We build rectangular buildings out of rectangular elements, store the energy from the rectangular panels in rectangular arrays of batteries that, again, are rectangular arrangements of rectangular cells. In AI, we push our data rectangles into rectangular GPUs where we compute rectangular arrays of artificial neural networks.

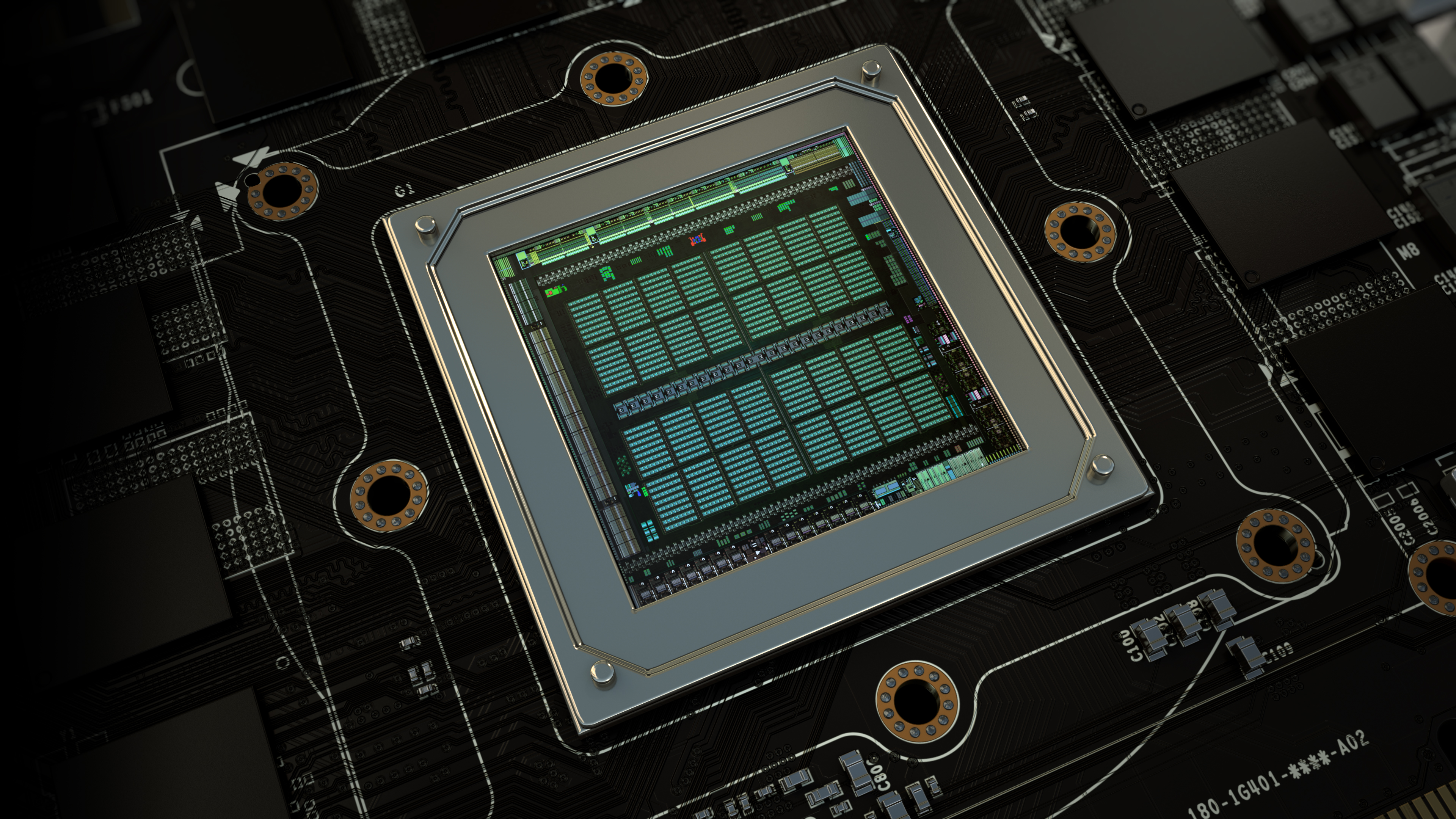

This beauty is a GPU. If you enlarge it, you can count 2048 cores.

You may be surprised to read that all the 2048 cores of the GPU not only execute the same program, but do it at exactly the same time. When one of the cores, as a result of a conditional branch, takes a different logical path, all the other cores wait until it finishes. Cores can only execute the same instruction as the others or do nothing.
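A toy cost model, not a GPU simulator, can illustrate the "same instruction or do nothing" rule described above. The branch names, the cycle counts and the helper functions are all made up for the sketch; the only assumption taken from the text is that lanes executing in lockstep must run each taken branch one after another while the other lanes idle.

```python
def choose_branch(x):
    # Hypothetical data-dependent conditional.
    return "even" if x % 2 == 0 else "odd"


def lockstep_cycles(lane_inputs, branch_cost):
    """Cycles for a group of lanes that must execute in lockstep.

    Every branch taken by at least one lane is executed in turn;
    lanes that did not take the current branch sit idle.
    """
    branches_taken = {choose_branch(x) for x in lane_inputs}
    return sum(branch_cost[b] for b in branches_taken)


def independent_cycles(lane_inputs, branch_cost):
    """Cycles if every lane were an independent core (slowest lane wins)."""
    return max(branch_cost[choose_branch(x)] for x in lane_inputs)


BRANCH_COST = {"even": 10, "odd": 30}  # made-up cycle counts

uniform = [0, 2, 4, 6]    # all lanes take the same path
divergent = [0, 1, 2, 3]  # lanes disagree

print(lockstep_cycles(uniform, BRANCH_COST))       # 10
print(lockstep_cycles(divergent, BRANCH_COST))     # 40
print(independent_cycles(divergent, BRANCH_COST))  # 30
```

With only two branches the penalty looks small, but it grows with every additional path the data can take.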

And surprisingly, many writers seriously claim that artificial neural networks are biologically inspired. But that would be a digression.

So, how is the "perfect world" of AI broken?

It is broken in different ways:

- Current platforms are only near optimal in one direction: computing billions of similar operations (neural networks) very fast, fitting a design that is in most cases fixed. If we put the complexity at the bottom, like "each word in a language has its own definition", the whole idea doesn't work anymore. GPUs become useless for speeding things up. Remember, a GPU is not a CPU with more cores; it imposes an orchestrated execution model.

- Even when we compute "just neural networks", efficiency depends on us pushing rectangles into other rectangles, i.e., stacking fully connected layers without considering alternatives. There is ongoing research in sparsity, which was not considered three years ago. It is usually applied after training to compress models, sometimes by 90% with almost no performance loss. GPUs and sparsity don't get along well!

- Current platforms do not have efficient mechanisms to try ideas on "just a few cases". You can, of course, build smaller data sets to explore ideas. Some hypotheses, such as "An elephant is heavier than an ant.", inherently require fewer cases to be verified than others, like "Martian gravity influences athletic records." Statisticians call that effect size. This is not minor: setting up even small models on these platforms has a high computational cost (many orders of magnitude compared to some evaluations on already loaded data). There is no easy way around it; large corporations simply throw in as much computation as they can (millions of dollars in computing). This has reached the point that some researchers believe AI research is about budget. And they are right in a way, at least when you are trying to do the same thing others are doing and your only distinction is having fewer resources. Not a good strategy!
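The effect size remark in the last point can be illustrated with a rough calculation. This is a sketch under stated assumptions: it uses the standard normal approximation for comparing two group means, n = 2 (z_{1-alpha/2} + z_{power})^2 / d^2, where d is the standardized difference (Cohen's d). The function name and the example values of d are illustrative, not measurements.

```python
import math
from statistics import NormalDist


def cases_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate cases per group needed to detect a standardized
    mean difference (Cohen's d) with a two-sided test.

    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{power})^2 / d^2.
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)


# Illustrative effect sizes only: huge, medium, tiny.
for d in (5.0, 0.5, 0.05):
    print(f"d = {d:>4}: about {cases_per_group(d)} cases per group")
```

Because the required number of cases scales with 1/d^2, a hypothesis with a large effect can be checked on a handful of cases, which is where a platform with low setup cost would matter most.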

We should increase interoperability of what we already have.

One giant leap for mankind ...

... was the invention of the file. It happened in several steps, starting in 1940, and files have only resembled those of current file systems since 1961. Today, we have amazing free software tools to process all kinds of files, but the creation of data pipelines is still tedious and error prone. We have to manage many "unknown file type, wrong version, option not supported, unexpected syntax" errors, wait until hundreds of gigabytes get decompressed just to check image sizes, etc.

We could wait until computers spontaneously get so smart that they guess what we want and do it for us using the right tools, with optimal arguments ...

... or just make the whole process machine understandable.

And the same applies to APIs

We could improve on standardized ways to abstract API details: finding such things as language, timestamp, expected expiry time, units, etc. Besides that, mechanisms to ask for updated data in consistent ways add value and simplify data processing.
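As a minimal sketch of what such an abstraction could look like, the snippet below attaches the metadata listed above to a value and applies one refresh rule. Every name here (`Described`, `is_stale`, `get_fresh`, `fetch_new`) is hypothetical and chosen for illustration; it is not the Jazz API or any existing standard.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Described:
    """A value plus the metadata listed in the text (hypothetical names)."""
    value: float
    units: str
    language: str
    timestamp: datetime         # when the value was produced
    expected_expiry: timedelta  # how long it is expected to stay valid

    def is_stale(self, now: datetime) -> bool:
        """True once the expected expiry time has passed."""
        return now >= self.timestamp + self.expected_expiry


def get_fresh(cached: Described, fetch, now: datetime) -> Described:
    """One consistent refresh rule: reuse the cached value unless stale."""
    return fetch() if cached.is_stale(now) else cached


t0 = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
reading = Described(21.5, "degC", "en", t0, timedelta(minutes=10))


def fetch_new():
    # Stand-in for a real API call.
    return Described(22.0, "degC", "en", t0 + timedelta(minutes=15),
                     timedelta(minutes=10))


print(get_fresh(reading, fetch_new, t0 + timedelta(minutes=5)).value)   # 21.5
print(get_fresh(reading, fetch_new, t0 + timedelta(minutes=15)).value)  # 22.0
```

The point of the sketch is that once units, timestamps and expiry are part of the data, the decision to ask for updated data no longer needs API-specific code.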

Putting it all together

We identified five opportunities for improvement over current platforms for all of us. And we discuss how Jazz addresses them in:

Nobody knows what AI needs to become True AI, but we do know a lot about building data solutions. The platform should ideally help experimentation while providing technology to build data engines in production environments.

- The efficiency of current systems is broken, unless all you need is pushing rectangles into other rectangles.

- Current platforms can sometimes be more a hindrance than a help in the exploration of new ideas.

- Current solutions are not giving any thought (at platform level) to efficient quick exploration.

- Working with different kinds of data files and services is tedious and error prone.

- EXTRA! We have to build platforms with code generation and lifelong learning in mind.